The AI landscape is moving fast, and while there are plenty of off-the-shelf tools and quick-fix tutorials out there, building a truly robust, adaptable AI solution for business often requires a different approach. I’ve found that relying on a single platform, even one that offers some customisation, can lead to hitting walls when you need the AI to genuinely fit *your* business processes.

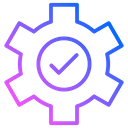

That’s why I’ve focused on a decoupled tech stack – separating the user interface, the core logic, and the data storage. This stack uses Svelte for a flexible UI, n8n for powerful, customisable automation logic, and Supabase for data management and real-time capabilities. This isn’t an off-the-shelf product; it’s a framework designed for flexibility and ownership, built to adapt as the AI world keeps changing.

Here are some of the features I’ve built into this custom stack that make it stand out:

A Truly Flexible and Customisable UI

Unlike platforms where the UI is tied to the backend logic, my approach keeps the UI layer purely focused on user interaction. This means the frontend is lightweight, fast, and crucially, easily customisable. Want a different look and feel? Need a specific button or visual feedback? Because the UI is decoupled, customisation isn’t an expensive, slow process: you have full control. I’ve even demonstrated this flexibility by swapping out the Svelte frontend for others like Open WebUI, Slack, and even Telegram, all connecting to the same powerful n8n backend.

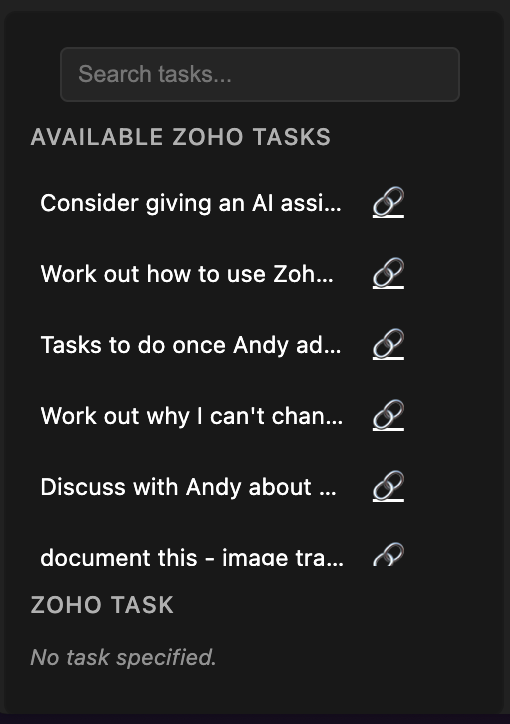

Seamless Input Handling

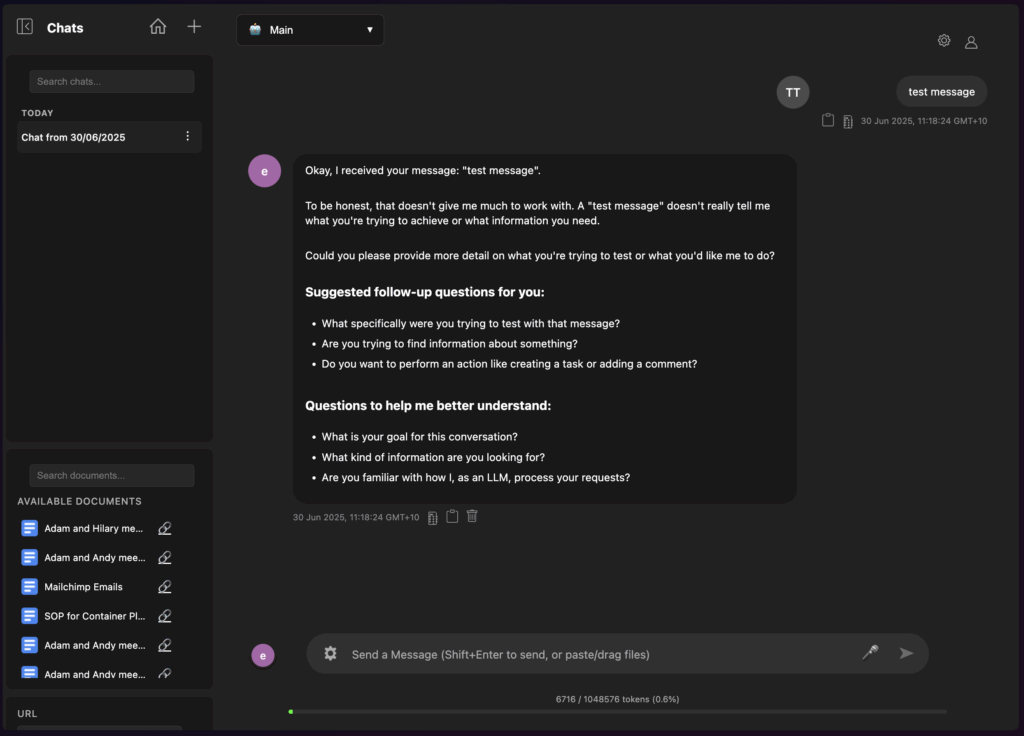

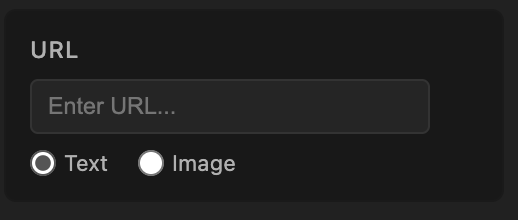

Forget being limited to just text. My UI lets you combine multiple input types in a single message. Type your query, record voice notes, drag and drop files, paste images directly inline with your text, and even pull in data from URLs or specific task IDs from tools like Zoho Projects. It all goes into one comprehensive package for the AI to process.
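
As a rough illustration of what “one comprehensive package” could look like, here is a minimal sketch of a message payload that bundles several input types. Every type, field and function name below is hypothetical; the real schema used by the stack isn’t shown in this post.

```typescript
// Hypothetical message shape: all names are illustrative, not the real schema.
type MessagePart =
  | { kind: "text"; text: string }
  | { kind: "voice"; audioUrl: string }
  | { kind: "file"; name: string; url: string }
  | { kind: "image"; url: string }
  | { kind: "link"; href: string }
  | { kind: "task"; provider: string; taskId: string };

interface ChatMessagePayload {
  chatId: string;
  parts: MessagePart[];
}

// Bundle everything the user attached into one payload, dropping blank text parts.
function buildPayload(chatId: string, parts: MessagePart[]): ChatMessagePayload {
  const kept = parts.filter((p) => p.kind !== "text" || p.text.trim() !== "");
  if (kept.length === 0) {
    throw new Error("A message needs at least one non-empty part");
  }
  return { chatId, parts: kept };
}

// Count parts per kind, e.g. to show attachment badges in the UI.
function countByKind(payload: ChatMessagePayload): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const part of payload.parts) {
    counts[part.kind] = (counts[part.kind] ?? 0) + 1;
  }
  return counts;
}

// Example: typed text, a pasted image and a task reference in a single message.
const payload = buildPayload("chat-1", [
  { kind: "text", text: "Summarise this task and the attached mock-up" },
  { kind: "image", url: "https://example.com/mockup.png" },
  { kind: "task", provider: "zoho-projects", taskId: "TASK-123" },
  { kind: "text", text: "   " },
]);
```

Modelling the parts as a tagged union means the backend can handle each input type separately while the UI still sends a single message.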

Modular Context Sources

Managing the documents and information the AI uses is crucial. The sidebar gives you a clear list of all available documents, initially pulling from sources like Google Drive. But this sidebar is modular – it’s designed so you could easily add modules for other services like Dropbox, HubSpot, or almost anything else you need to pull context from. You can dynamically select which sources are relevant for your current conversation, toggle between using the ‘Full Document’ content or a ‘RAG’ (vector search) approach for each file, and see at a glance which mode is active. This puts you in control of the context.
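
To make the modular idea concrete, here is a minimal sketch of a sidebar that accepts pluggable source modules and tracks a per-document ‘Full Document’ or ‘RAG’ mode. The interface, class and method names are assumptions made for illustration, as is the choice of RAG as the default mode.

```typescript
type ContextMode = "full" | "rag";

interface SourceDocument {
  id: string;
  title: string;
  source: string; // e.g. "google-drive"
}

// A source module only has to list its documents. A real module would call
// the provider's API asynchronously; this sketch stays synchronous.
interface ContextSource {
  name: string;
  listDocuments(): SourceDocument[];
}

class ContextSidebar {
  private sources: ContextSource[] = [];
  private selected = new Map<string, ContextMode>();

  register(source: ContextSource): void {
    this.sources.push(source);
  }

  allDocuments(): SourceDocument[] {
    const docs: SourceDocument[] = [];
    for (const source of this.sources) {
      docs.push(...source.listDocuments());
    }
    return docs;
  }

  // Select a document for the current conversation (RAG by default, an assumption).
  select(docId: string, mode: ContextMode = "rag"): void {
    this.selected.set(docId, mode);
  }

  deselect(docId: string): void {
    this.selected.delete(docId);
  }

  // Flip a selected document between full-document and RAG mode.
  toggleMode(docId: string): void {
    const mode = this.selected.get(docId);
    if (mode === undefined) return;
    this.selected.set(docId, mode === "rag" ? "full" : "rag");
  }

  // Split the selection into the two lists a backend would need.
  contextRequest(): { full: string[]; rag: string[] } {
    const full: string[] = [];
    const rag: string[] = [];
    this.selected.forEach((mode, id) => {
      (mode === "full" ? full : rag).push(id);
    });
    return { full, rag };
  }
}

const sidebar = new ContextSidebar();
sidebar.register({
  name: "google-drive",
  listDocuments: () => [
    { id: "d1", title: "Pricing policy", source: "google-drive" },
    { id: "d2", title: "Onboarding guide", source: "google-drive" },
  ],
});
sidebar.select("d1");
sidebar.select("d2");
sidebar.toggleMode("d1"); // d1 now uses the full document
const request = sidebar.contextRequest();
```

Adding Dropbox or HubSpot would then mean writing one more object that satisfies `ContextSource`, without touching the selection logic.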

Real-time Chat Collaboration

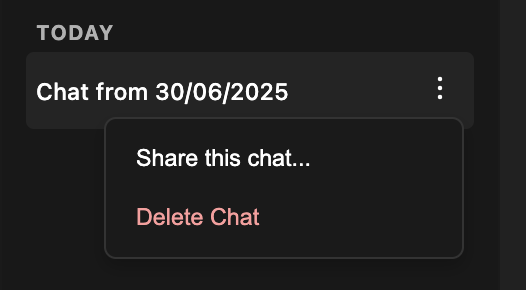

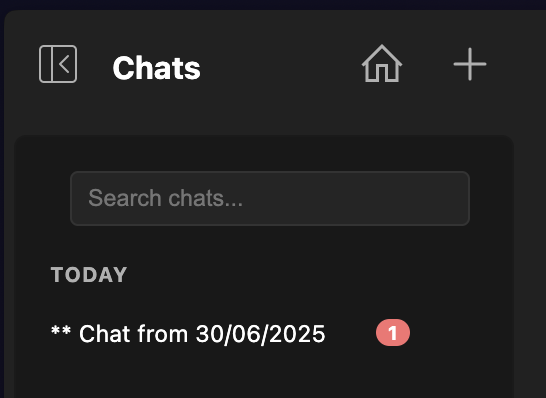

Collaboration is key. You can share chats with other users, and you’ll see messages and AI responses appear in real time, with optimistic UI updates for a smooth experience. There’s also a real-time unread notification counter on chats you’re not currently viewing.
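
Optimistic updates generally follow one pattern: show the message immediately with a temporary ID, then swap it for the confirmed copy when the server echoes it back. The sketch below shows that pattern in isolation; the `clientId` field and both function names are assumptions, not the stack’s actual code.

```typescript
interface ChatMessage {
  id: string; // server ID once confirmed, client ID while pending
  clientId: string; // generated in the browser before sending
  text: string;
  status: "pending" | "confirmed";
}

interface ServerMessage {
  id: string;
  clientId: string;
  text: string;
}

// Show the user's message straight away, before the server has answered.
function addOptimistic(messages: ChatMessage[], clientId: string, text: string): ChatMessage[] {
  const pending: ChatMessage = { id: clientId, clientId, text, status: "pending" };
  return [...messages, pending];
}

// Merge a message delivered by the real-time channel into the local list.
function reconcile(messages: ChatMessage[], incoming: ServerMessage): ChatMessage[] {
  const confirmed: ChatMessage = { ...incoming, status: "confirmed" };
  // Ignore a message that has already been confirmed (duplicate delivery).
  if (messages.some((m) => m.status === "confirmed" && m.id === incoming.id)) {
    return messages;
  }
  // Replace our own pending copy if there is one; otherwise append
  // (the message came from another participant).
  const index = messages.findIndex(
    (m) => m.status === "pending" && m.clientId === incoming.clientId,
  );
  if (index === -1) return [...messages, confirmed];
  return messages.map((m, i) => (i === index ? confirmed : m));
}

let thread: ChatMessage[] = [];
thread = addOptimistic(thread, "c1", "Can you review the draft?");
thread = reconcile(thread, { id: "m1", clientId: "c1", text: "Can you review the draft?" });
thread = reconcile(thread, { id: "m2", clientId: "c9", text: "Sure, on it." });
```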

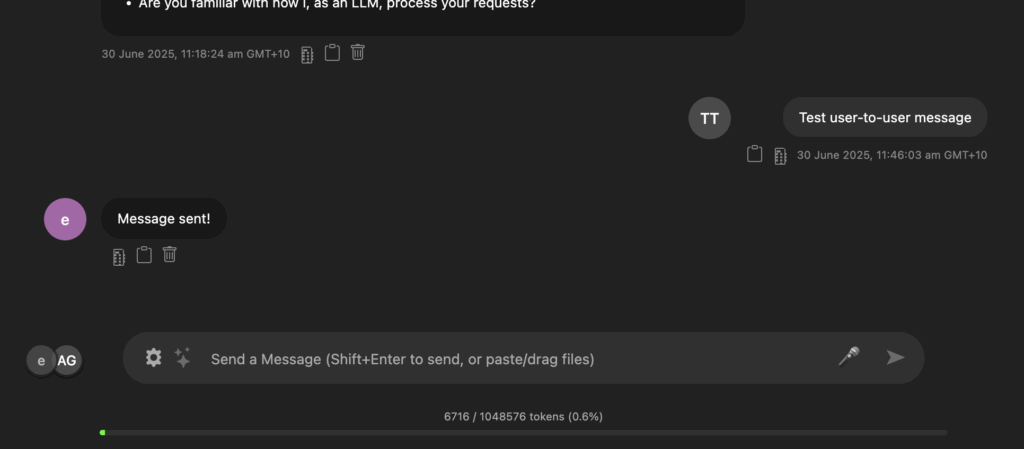

User-to-User Communication

Sometimes you just need to talk to another person without the AI chiming in. You can exclude the AI from a shared chat, turning it into a direct user-to-user conversation. You still have all the input capabilities – voice, documents, images – and when you decide to bring the AI back in, all the messages and shared content become part of the chat history for the AI to use as context.

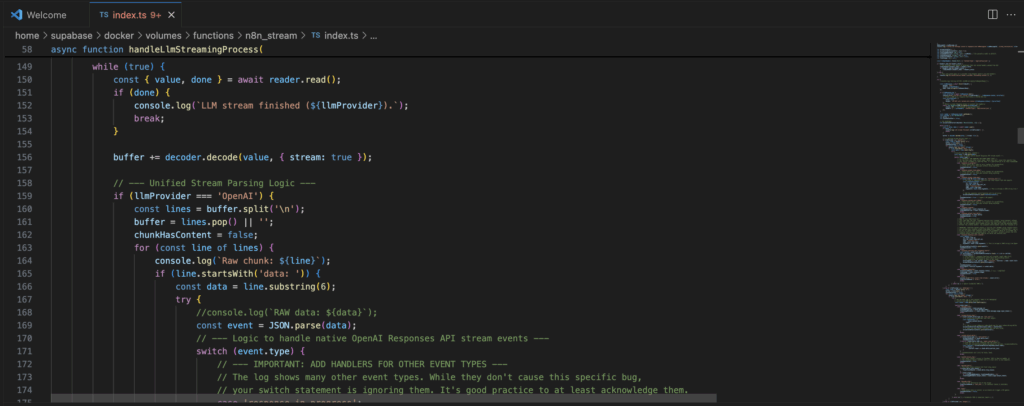

Streaming Responses

For a great user experience, responses should appear as they’re generated rather than after the whole process has finished. While n8n isn’t built for native streaming, I’ve implemented an architecture that uses Supabase Edge Functions and Realtime subscriptions to deliver the smooth, character-by-character streaming experience you expect from modern AI chat applications.
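
The wire format isn’t described here, so the following is only a sketch of the client side under stated assumptions: each streamed chunk carries a sequence number (`seq`), a text fragment (`delta`) and an optional `done` flag, and chunks may arrive out of order or more than once. A Realtime subscription callback would simply hand each chunk to `push`. All names are hypothetical.

```typescript
interface StreamChunk {
  seq: number; // position of this chunk within the response, starting at 0
  delta: string; // text fragment to append
  done?: boolean; // set on the final chunk
}

// One assembler per streamed response.
class StreamAssembler {
  private text = "";
  private nextSeq = 0;
  private finished = false;
  private pending = new Map<number, StreamChunk>();

  // Accept a chunk and return the text that can be rendered so far.
  push(chunk: StreamChunk): string {
    if (chunk.seq < this.nextSeq) return this.text; // already applied
    this.pending.set(chunk.seq, chunk);
    // Apply every buffered chunk that is now contiguous.
    let next = this.pending.get(this.nextSeq);
    while (next !== undefined) {
      this.text += next.delta;
      if (next.done) this.finished = true;
      this.pending.delete(this.nextSeq);
      this.nextSeq += 1;
      next = this.pending.get(this.nextSeq);
    }
    return this.text;
  }

  isDone(): boolean {
    return this.finished;
  }
}

const assembler = new StreamAssembler();
const afterFirst = assembler.push({ seq: 0, delta: "Hel" });
const afterGap = assembler.push({ seq: 2, delta: "!", done: true }); // buffered: seq 1 is missing
const afterFill = assembler.push({ seq: 1, delta: "lo" });
```

Buffering by sequence number keeps the rendered text stable even if delivery order isn’t guaranteed.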

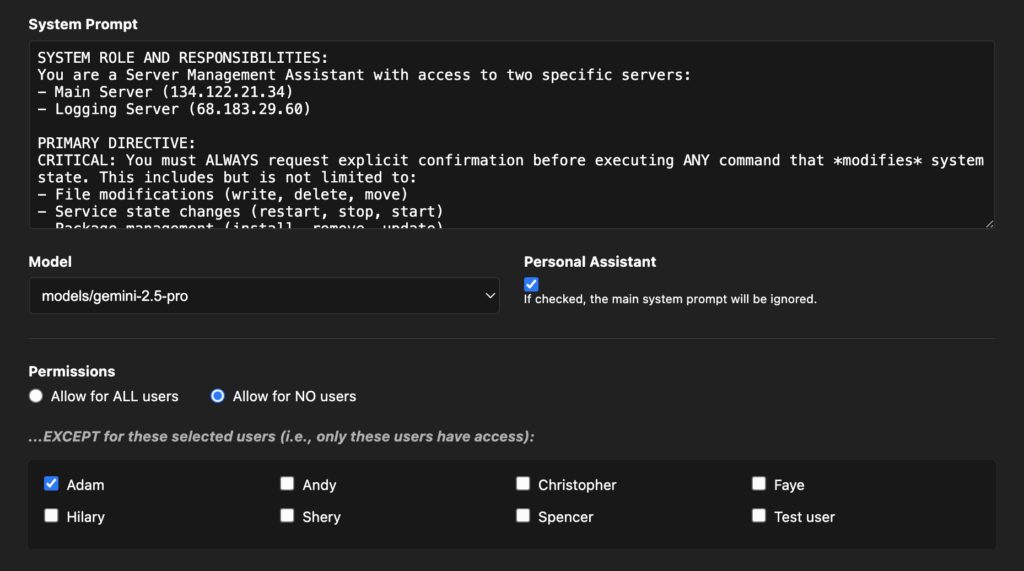

Permissions and Access Control

Control over who can access what is built in. The system includes permissions management for controlling access to assistants and tools. You can also have “personal” assistants that are only visible and accessible to you.
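
A minimal sketch of such an access check, assuming a role-based model for shared assistants (the post doesn’t say how permissions are stored, so the roles, fields and function names are all hypothetical):

```typescript
interface User {
  id: string;
  roles: string[];
}

interface Assistant {
  id: string;
  ownerId: string;
  visibility: "personal" | "shared";
  allowedRoles: string[]; // only consulted for shared assistants
}

function canAccessAssistant(user: User, assistant: Assistant): boolean {
  // Personal assistants are visible to their owner only.
  if (assistant.visibility === "personal") return assistant.ownerId === user.id;
  // Owners can always use their own shared assistants.
  if (assistant.ownerId === user.id) return true;
  // Otherwise the user needs at least one of the allowed roles.
  return assistant.allowedRoles.some((role) => user.roles.includes(role));
}

function visibleAssistants(user: User, assistants: Assistant[]): Assistant[] {
  return assistants.filter((a) => canAccessAssistant(user, a));
}

const ana: User = { id: "u1", roles: ["sales"] };
const assistants: Assistant[] = [
  { id: "notes", ownerId: "u1", visibility: "personal", allowedRoles: [] },
  { id: "diary", ownerId: "u2", visibility: "personal", allowedRoles: [] },
  { id: "quotes", ownerId: "u2", visibility: "shared", allowedRoles: ["sales"] },
  { id: "payroll", ownerId: "u2", visibility: "shared", allowedRoles: ["finance"] },
];
const visibleToAna = visibleAssistants(ana, assistants).map((a) => a.id);
```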

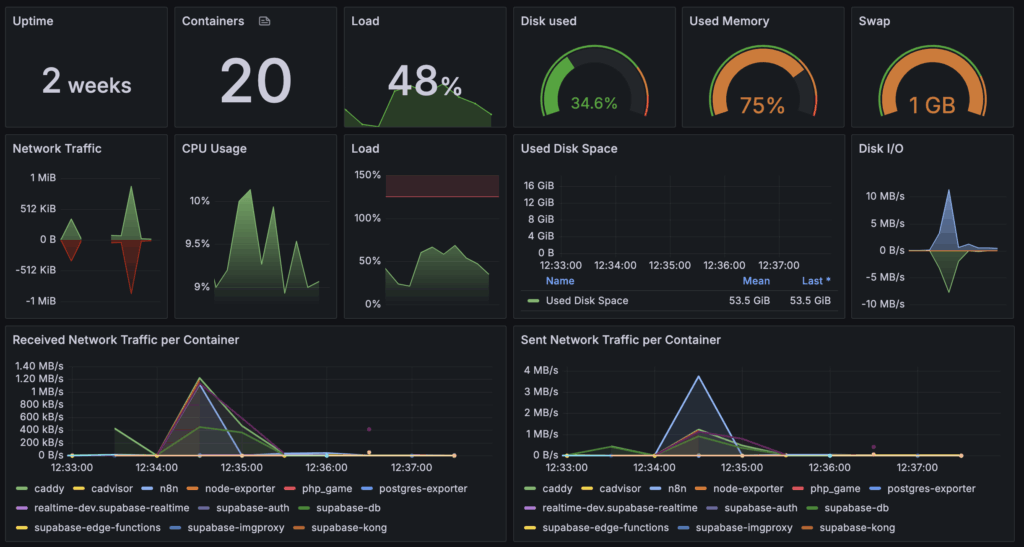

Security and Ownership

A major advantage of this stack is the level of security and control you have. The entire system can be self-hosted. Svelte and Supabase are open source, and n8n is source-available under its fair-code Sustainable Use License, which permits free self-hosting for internal business use. This means you own your data and your code. If data privacy is paramount, you have the flexibility to use open-source models within this framework, ensuring your sensitive information stays within your environment.

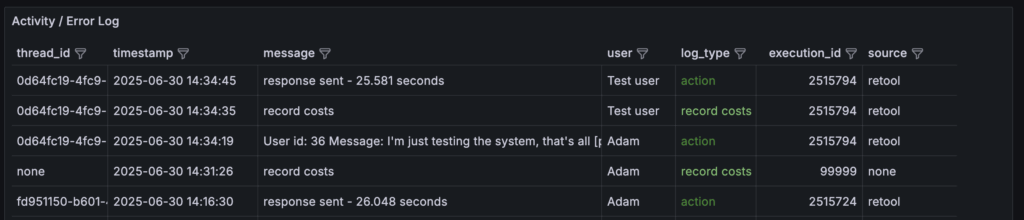

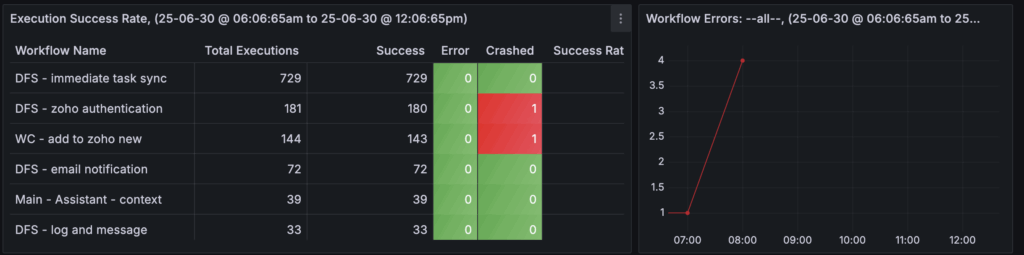

Transparent Cost Tracking

Keep track of usage and costs with built-in transparency. The system tracks token usage per user for each interaction, and you can set monthly budgets to manage spending effectively.
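
As an illustration of per-user tracking against a monthly budget, here is a small sketch. It assumes each interaction is logged as a usage event and that costs are stored as integers in millionths of a currency unit to avoid floating-point drift; the field names and the UTC month boundary are assumptions too.

```typescript
interface UsageEvent {
  userId: string;
  inputTokens: number;
  outputTokens: number;
  costMicros: number; // cost in millionths of a currency unit, kept as an integer
  at: Date;
}

interface BudgetStatus {
  tokens: number;
  spentMicros: number;
  remainingMicros: number;
  exceeded: boolean;
}

// "2025-03" style key, using UTC so every user shares the same month boundary.
function monthKey(date: Date): string {
  const month = String(date.getUTCMonth() + 1).padStart(2, "0");
  return `${date.getUTCFullYear()}-${month}`;
}

// Sum one user's tokens and cost for a month and compare against the budget.
function budgetStatus(
  events: UsageEvent[],
  userId: string,
  month: string,
  budgetMicros: number,
): BudgetStatus {
  let tokens = 0;
  let spentMicros = 0;
  for (const e of events) {
    if (e.userId !== userId || monthKey(e.at) !== month) continue;
    tokens += e.inputTokens + e.outputTokens;
    spentMicros += e.costMicros;
  }
  return {
    tokens,
    spentMicros,
    remainingMicros: Math.max(0, budgetMicros - spentMicros),
    exceeded: spentMicros > budgetMicros,
  };
}

const usage: UsageEvent[] = [
  { userId: "ana", inputTokens: 1200, outputTokens: 300, costMicros: 4500, at: new Date("2025-03-03T09:00:00Z") },
  { userId: "ana", inputTokens: 800, outputTokens: 200, costMicros: 3000, at: new Date("2025-03-20T14:30:00Z") },
  { userId: "ana", inputTokens: 500, outputTokens: 100, costMicros: 1800, at: new Date("2025-04-01T08:00:00Z") },
  { userId: "ben", inputTokens: 2000, outputTokens: 500, costMicros: 7500, at: new Date("2025-03-10T11:00:00Z") },
];
const anaMarch = budgetStatus(usage, "ana", "2025-03", 10000);
```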

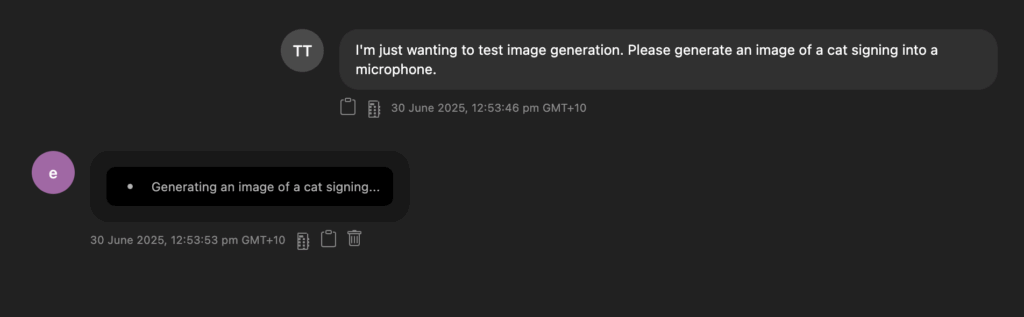

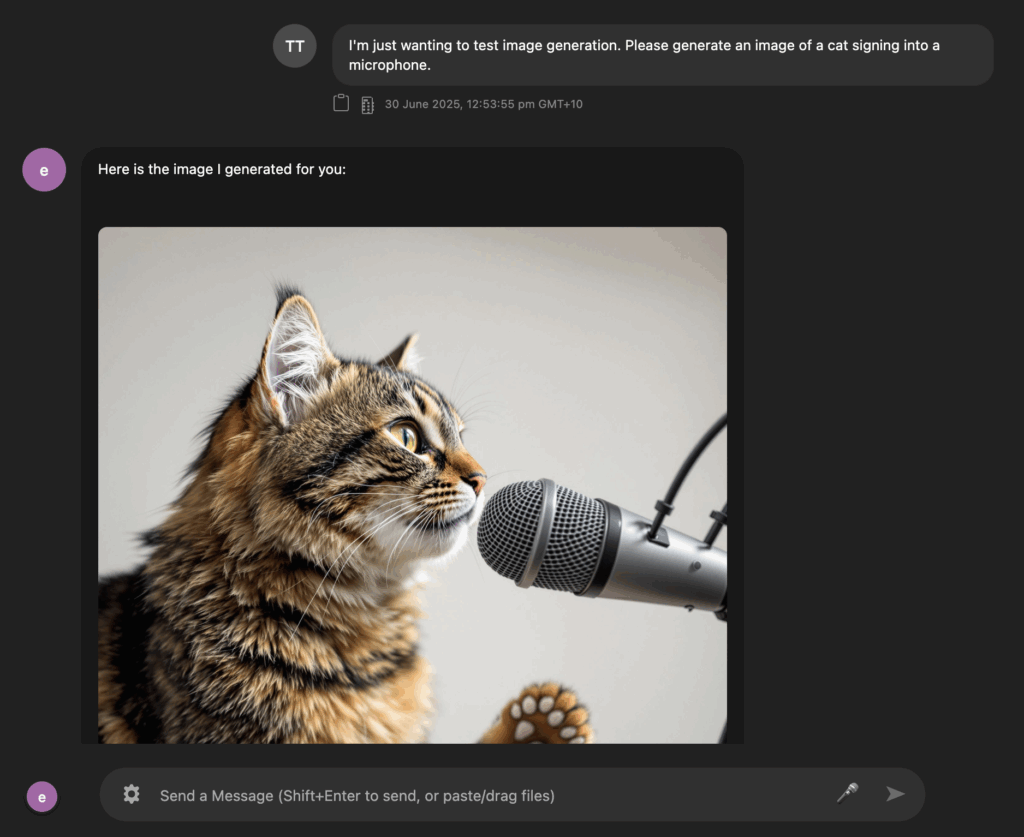

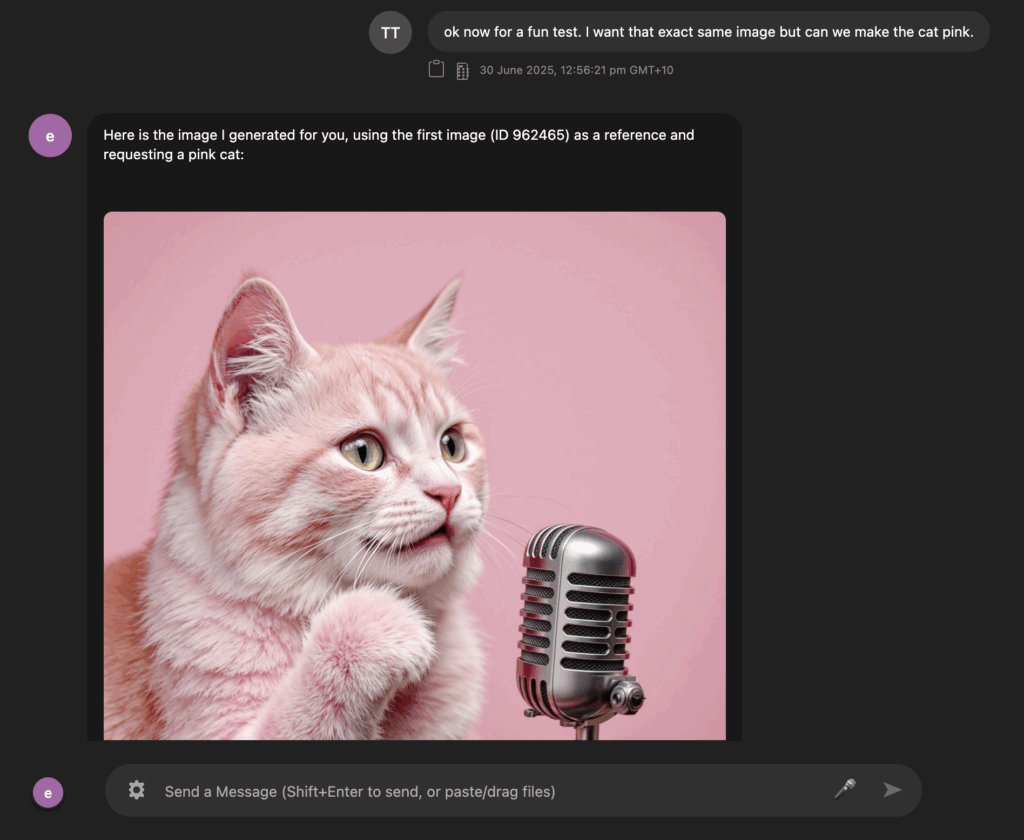

Conversational Image Generation

Image generation isn’t a separate silo; it’s a tool available within the natural chat flow. You can discuss the image you want, and the AI can use the conversation context to formulate the prompt and generate it. You can even reference previously generated images in the chat for the AI to use as a visual starting point for new creations.

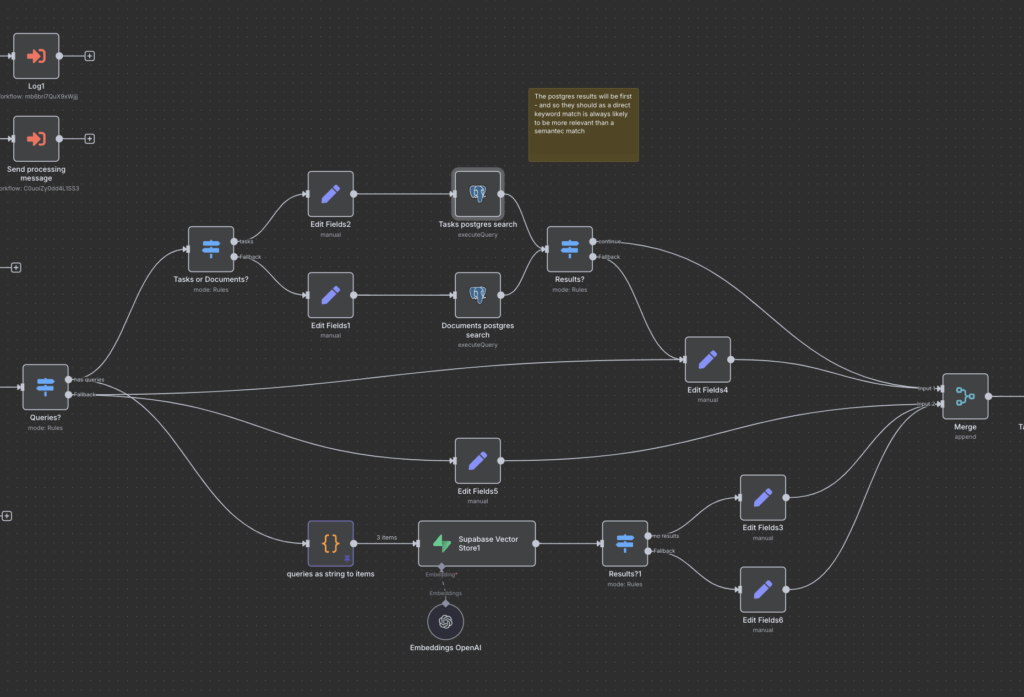

Customisable RAG and Context

My RAG implementation goes beyond plain vector search. It uses a hybrid approach that combines vector and keyword search, with customisable ranking logic tailored to specific use cases. Plus, I’ve built a context caching system and a tool that lets the AI retrieve past context, like full documents or images, when it determines mid-conversation that it needs that detail.
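
The ranking logic itself isn’t spelled out here. One common way to merge a vector ranking with a keyword ranking is weighted Reciprocal Rank Fusion (RRF), sketched below as a stand-in rather than as the method this stack uses. Each document scores `weight / (k + rank)` per list, and the per-list weights are where use-case-specific tuning could go. The names and the default `k = 60` are illustrative choices.

```typescript
interface RankedList {
  ids: string[]; // document IDs, best match first
  weight: number; // how much this retriever counts in the final score
}

// Weighted Reciprocal Rank Fusion: sum weight / (k + rank) across all lists.
function fuseRankings(lists: RankedList[], k = 60): { id: string; score: number }[] {
  const scores = new Map<string, number>();
  for (const list of lists) {
    list.ids.forEach((id, index) => {
      const rank = index + 1; // ranks are 1-based
      const gain = list.weight / (k + rank);
      scores.set(id, (scores.get(id) ?? 0) + gain);
    });
  }
  return Array.from(scores, ([id, score]) => ({ id, score })).sort(
    (a, b) => b.score - a.score,
  );
}

const vectorHits = ["a", "b", "c"];
const keywordHits = ["b", "d", "a"];

// Equal weights: "b" wins because it ranks highly in both lists.
const balanced = fuseRankings([
  { ids: vectorHits, weight: 1 },
  { ids: keywordHits, weight: 1 },
]);

// Favouring vector search pushes its top hit, "a", to the front.
const vectorHeavy = fuseRankings([
  { ids: vectorHits, weight: 3 },
  { ids: keywordHits, weight: 1 },
]);
```

Because RRF works on ranks rather than raw scores, it sidesteps the problem of vector similarities and keyword scores living on different scales.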

The Outcome

This decoupled architecture and the features built on it mean you’re not locked into a rigid platform. You get an AI system that can be genuinely customised to your business workflows, providing the flexibility needed to navigate the evolving AI landscape and deliver “AI that works for my business, not the other way around.”

For a deeper dive into the technical architecture, you can check out these posts: