Multi-Model, Multi-Platform AI Pipe in OpenWebUI

demodomain

February 22, 2025


The pipe is available here:

https://openwebui.com/f/rabbithole/combined_ai_and_n8n

Introduction

OpenWebUI supports connections to OpenAI and to any platform that implements the OpenAI API format (DeepSeek, OpenRouter, etc.). Google, Anthropic, Perplexity and, obviously, n8n are not supported.

I had previously written separate pipes to connect OWUI to these models and to n8n; now I’ve combined all four into a single pipe.

This technical walkthrough explores the implementation of a unified pipe that connects OpenWebUI with Google’s Gemini models, Anthropic’s Claude models, Perplexity models, and n8n workflow automation.

Core Architecture

The pipe is built around a central Pipe class that handles routing, request processing, and response management. The architecture follows these key principles:

- Provider Abstraction: Each AI provider (Google, Anthropic, Perplexity) has its own request handler

- Unified Interface: Common parameters are standardized across providers

- Extensible Design: New providers can be added without changing the core logic

- Error Resilience: Comprehensive error handling at each layer
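The provider-abstraction and extensibility principles can be illustrated with a small registry. This is a sketch, not the pipe's actual code: ProviderRegistry, register, and the lambda model listers are hypothetical names, and skipping (and logging) a provider whose model discovery fails is an assumed policy. The point is that adding a provider is a single register call while pipes() never changes.

```python
import logging
from typing import Callable, Dict, List

logger = logging.getLogger(__name__)

# Hypothetical: each provider supplies a function returning [{"id": ..., "name": ...}, ...]
ModelLister = Callable[[], List[dict]]


class ProviderRegistry:
    """Sketch of provider abstraction: providers plug in without touching core logic."""

    def __init__(self) -> None:
        self._listers: Dict[str, ModelLister] = {}

    def register(self, name: str, lister: ModelLister) -> None:
        # Adding a provider is one call; pipes() below stays the same.
        self._listers[name] = lister

    def pipes(self) -> List[dict]:
        """Collect models from every provider, skipping any provider that fails."""
        all_pipes: List[dict] = []
        for name, lister in self._listers.items():
            try:
                all_pipes.extend(lister())
            except Exception:
                # One provider's outage (bad key, timeout) shouldn't hide the others.
                logger.exception("Model discovery failed for %s", name)
        return all_pipes


def broken_lister() -> List[dict]:
    raise RuntimeError("invalid API key")


registry = ProviderRegistry()
registry.register("google", lambda: [{"id": "google.gemini-pro", "name": "Gemini Pro"}])
registry.register("anthropic", broken_lister)
print(registry.pipes())  # → [{'id': 'google.gemini-pro', 'name': 'Gemini Pro'}]
```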

Key Components

Configuration Management

The pipe uses Pydantic’s BaseModel for configuration management:

```python
from pydantic import BaseModel, Field

class Valves(BaseModel):
    GOOGLE_API_KEY: str = Field(default="")
    ANTHROPIC_API_KEY: str = Field(default="")
    # ... additional configuration fields
```

This provides:

- Type validation

- Default values

- Documentation through Field descriptions

- Environment variable integration
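The excerpt above doesn't show Field descriptions or environment variables. One way to get both is sketched below, assuming the defaults are read with os.getenv when the class is defined (a plain BaseModel does not read the environment by itself; pydantic-settings' BaseSettings would). The USE_PERMISSIVE_SAFETY default of False and the description strings are assumptions, not taken from the pipe.

```python
import os

from pydantic import BaseModel, Field


class Valves(BaseModel):
    # Defaults are read from the environment once, when the class is defined
    GOOGLE_API_KEY: str = Field(
        default=os.getenv("GOOGLE_API_KEY", ""),
        description="API key for Google Gemini models",
    )
    ANTHROPIC_API_KEY: str = Field(
        default=os.getenv("ANTHROPIC_API_KEY", ""),
        description="API key for Anthropic Claude models",
    )
    USE_PERMISSIVE_SAFETY: bool = Field(
        default=False,  # assumed default
        description="Use permissive Gemini safety thresholds",
    )


valves = Valves(GOOGLE_API_KEY="test-key")  # explicit values override the env defaults
print(valves.GOOGLE_API_KEY)         # → test-key
print(valves.USE_PERMISSIVE_SAFETY)  # → False
```

Because the environment is read at import time, changing an environment variable later has no effect until the module is reloaded.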

Model Discovery

The pipe dynamically discovers available models from each provider:

```python
from typing import List

# Method of the Pipe class
def pipes(self) -> List[dict]:
    all_pipes = []
    all_pipes.extend(self.get_google_models())
    all_pipes.extend(self.get_anthropic_models())
    # ... additional providers
    return all_pipes
```

Request Routing

The main pipe method routes each request based on its model ID:

- Strips the prefix: all_models.google.gemini-pro → google.gemini-pro

- Identifies the provider: checks for keywords like “google”, “anthropic”, “claude”

- Routes to the appropriate handler: handle_google_request or handle_anthropic_request
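The three routing steps can be sketched as follows. This assumes the prefix is everything before the first dot, and the keyword table, strip_prefix, detect_provider, and route are hypothetical names; the lambdas stand in for handle_google_request and handle_anthropic_request.

```python
from typing import Callable, Dict

# Keyword -> provider, following the keywords listed above (table is illustrative)
PROVIDER_KEYWORDS = {"google": "google", "anthropic": "anthropic", "claude": "anthropic"}

Handler = Callable[[str, dict], str]


def strip_prefix(model_id: str) -> str:
    """'all_models.google.gemini-pro' -> 'google.gemini-pro' (drops the first segment)."""
    return model_id.split(".", 1)[1] if "." in model_id else model_id


def detect_provider(model: str) -> str:
    lowered = model.lower()
    for keyword, provider in PROVIDER_KEYWORDS.items():
        if keyword in lowered:
            return provider
    raise ValueError(f"Unknown provider for model {model!r}")


def route(model_id: str, body: dict, handlers: Dict[str, Handler]) -> str:
    model = strip_prefix(model_id)
    return handlers[detect_provider(model)](model, body)


# Stand-ins for handle_google_request / handle_anthropic_request
handlers: Dict[str, Handler] = {
    "google": lambda model, body: f"google handled {model}",
    "anthropic": lambda model, body: f"anthropic handled {model}",
}
print(route("all_models.google.gemini-pro", {}, handlers))  # → google handled google.gemini-pro
print(route("all_models.claude-3-5-sonnet", {}, handlers))  # → anthropic handled claude-3-5-sonnet
```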

Message Processing

Both Google and Anthropic handlers transform messages into provider-specific formats:

- Extract system messages

- Process text content

- Handle image attachments

- Apply safety settings

- Configure generation parameters
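As an illustration of the message transformation, here is a minimal sketch for the Anthropic side, assuming OpenWebUI delivers messages in the OpenAI chat format (string content or a list of "text" / "image_url" parts). to_anthropic is a hypothetical name, not the pipe's function; it pulls system messages into Anthropic's separate top-level system field and turns base64 data URLs into image blocks. Remote image URLs are skipped here for brevity. A Gemini version would follow the same pattern with "parts" and the "model" role for assistant turns.

```python
from typing import Any, Dict, List, Tuple


def to_anthropic(messages: List[Dict[str, Any]]) -> Tuple[str, List[Dict[str, Any]]]:
    """Convert OpenAI-style chat messages into (system_prompt, messages) for Anthropic."""
    system_parts: List[str] = []
    converted: List[Dict[str, Any]] = []
    for msg in messages:
        content = msg["content"]
        if msg["role"] == "system":
            # Assumes system content is plain text
            system_parts.append(content)
            continue
        if isinstance(content, str):
            content = [{"type": "text", "text": content}]
        blocks: List[Dict[str, Any]] = []
        for part in content:
            if part["type"] == "text":
                blocks.append({"type": "text", "text": part["text"]})
            elif part["type"] == "image_url":
                url = part["image_url"]["url"]
                if not url.startswith("data:"):
                    continue  # remote URLs would need fetching first (not shown)
                header, data = url.split(",", 1)  # "data:image/png;base64", "<payload>"
                media_type = header[len("data:"):].split(";", 1)[0]
                blocks.append({
                    "type": "image",
                    "source": {"type": "base64", "media_type": media_type, "data": data},
                })
        converted.append({"role": msg["role"], "content": blocks})
    return "\n".join(system_parts), converted


system, msgs = to_anthropic([
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": [
        {"type": "text", "text": "What is in this image?"},
        {"type": "image_url", "image_url": {"url": "data:image/png;base64,iVBORw0KGgo="}},
    ]},
])
print(system)                                          # → Be brief.
print(msgs[0]["content"][1]["source"]["media_type"])  # → image/png
```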

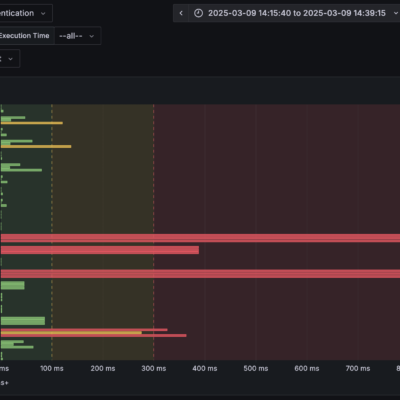

n8n Integration

The n8n integration provides real-time status updates through:

- Primary webhook for initial requests

- Status webhook for progress updates

- Asyncio-based polling loop

- Chain-of-Thought visualization
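The polling loop can be sketched with asyncio as below. This is an illustration under assumptions, not the pipe's code: fetch_status and emit are hypothetical callables (in practice fetch_status would POST to the n8n status webhook and emit would push a status event to the UI), and the update shape {"type": ..., "message": ...} is assumed. The loop stops when the main request finishes (signalled through an asyncio.Event) or when n8n reports an error.

```python
import asyncio
from typing import Awaitable, Callable, List

# Hypothetical callables standing in for the status webhook call and the UI emitter
FetchStatus = Callable[[], Awaitable[dict]]
Emit = Callable[[str], Awaitable[None]]


async def poll_status(fetch_status: FetchStatus, emit: Emit,
                      interval: float, stop: asyncio.Event) -> None:
    """Poll every `interval` seconds until `stop` is set or n8n reports an error."""
    while not stop.is_set():
        update = await fetch_status()
        kind = update.get("type")
        if kind == "status":
            await emit(update["message"])
        elif kind == "error":
            await emit(f"Error: {update.get('message', 'unknown')}")
            return  # shut the polling loop down on error
        # kind == "no update": nothing new to show this round
        try:
            # Sleep for `interval`, but wake immediately if the main request finishes
            await asyncio.wait_for(stop.wait(), timeout=interval)
        except asyncio.TimeoutError:
            pass


async def demo() -> List[str]:
    shown: List[str] = []
    updates = iter([
        {"type": "status", "message": "Searching knowledge base"},
        {"type": "no update"},
        {"type": "status", "message": "Writing answer"},
    ])

    async def fetch_status() -> dict:
        return next(updates, {"type": "no update"})

    async def emit(text: str) -> None:
        shown.append(text)

    stop = asyncio.Event()
    poller = asyncio.create_task(poll_status(fetch_status, emit, 0.01, stop))
    await asyncio.sleep(0.1)  # stands in for awaiting the primary webhook's reply
    stop.set()                # main reply arrived: stop polling
    await poller
    return shown


print(asyncio.run(demo()))  # → ['Searching knowledge base', 'Writing answer']
```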

For the n8n integration:

- Create an account at https://webui.demodomain.dev/ to try a demo of this pipe chatting with a live n8n workflow.

- The pipe connects to n8n by sending user messages to an n8n webhook.

- The pipe starts a loop that calls a second n8n webhook every x seconds to fetch status updates, and displays the latest status in the UI.

- Updates from n8n can be “status”, “no update”, or “error”.

- Errors are handled gracefully, and the loop that checks for updates is shut down.

- OpenWebUI’s metadata.get("chat_id") is used to manage chat sessions with n8n.

- A collapsible element containing the CoT (Chain of Thought) tags is created above the final message.

- See the accompanying demo n8n workflow here: https://github.com/yupguv/openwebui/blob/main/n8n_workflow_openwebui_chat

- The demo n8n workflow optionally uses Supabase. Table definitions are here: https://github.com/yupguv/openwebui/blob/main/openwebui_n8n_supabase_tables
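Two of the behaviours listed above, tying requests to a chat session via chat_id and placing CoT steps in a collapsible element, could look roughly like this. Both functions and the payload keys ("chatId", "message") are hypothetical; the demo workflow's real field names may differ. The collapsible section assumes the chat UI renders an HTML details/summary block.

```python
import html
from typing import List


def build_n8n_payload(user_message: str, metadata: dict) -> dict:
    """Hypothetical payload shape for the primary n8n webhook."""
    # chat_id ties every request from one OpenWebUI chat to the same n8n session
    return {"chatId": metadata.get("chat_id"), "message": user_message}


def render_with_cot(cot_steps: List[str], final_answer: str) -> str:
    """Prepend a collapsible <details> block holding the chain-of-thought steps."""
    if not cot_steps:
        return final_answer
    # Escape each step so stray HTML in a step can't break the <details> block
    steps = "\n".join(f"- {html.escape(step)}" for step in cot_steps)
    return (
        "<details>\n<summary>Chain of Thought</summary>\n\n"
        f"{steps}\n\n</details>\n\n{final_answer}"
    )


print(build_n8n_payload("Hi", {"chat_id": "abc123"}))  # → {'chatId': 'abc123', 'message': 'Hi'}
print(render_with_cot(["Looked up order", "Drafted reply"], "Your order shipped."))
```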

Multi-modal Features

Image Processing

The pipe includes image handling:

```python
def process_image(self, image_data):
    """Process image data with size validation."""
    if image_data["image_url"]["url"].startswith("data:image"):
        # Handle base64-encoded images ("data:image/png;base64,<payload>")
        header, base64_data = image_data["image_url"]["url"].split(",", 1)
        mime_type = header.split(":", 1)[1].split(";", 1)[0]  # e.g. "image/png"
        # ... size validation
    else:
        # Handle URL-based images
        url = image_data["image_url"]["url"]
        # ... size validation
```

Safety Controls

The pipe implements configurable safety thresholds:

```python
import google.generativeai as genai

if self.valves.USE_PERMISSIVE_SAFETY:
    safety_settings = {
        genai.types.HarmCategory.HARM_CATEGORY_HARASSMENT:
            genai.types.HarmBlockThreshold.BLOCK_NONE,
        # ... additional categories
    }
```

Response Streaming

Both Google and Anthropic handlers support streaming responses:

```python
import requests

def stream_anthropic_response(self, url, headers, payload):
    """Handle streaming responses from Anthropic."""
    with requests.post(url, headers=headers, json=payload, stream=True) as response:
        for line in response.iter_lines():
            if not line:
                continue  # skip blank separator lines between SSE events
            # ... process and yield chunks
```

Implementation Challenges

1. Provider Differences

Each provider has unique requirements:

- Different message formats

- Varying parameter names

- Distinct error handling needs

Solution: Abstract common patterns while preserving provider-specific optimizations.
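One concrete case of “varying parameter names” is generation settings. The sketch below maps OpenAI-style names (as OpenWebUI sends them) to the field names of Gemini's REST generationConfig (camelCase; the google-generativeai Python SDK uses snake_case equivalents such as max_output_tokens) and of Anthropic's Messages API. PARAM_NAMES and map_params are hypothetical, and only a handful of common parameters are covered.

```python
from typing import Any, Dict

# OpenAI-style name -> provider-specific name
PARAM_NAMES: Dict[str, Dict[str, str]] = {
    "google": {  # Gemini REST API generationConfig fields
        "temperature": "temperature",
        "top_p": "topP",
        "top_k": "topK",
        "max_tokens": "maxOutputTokens",
        "stop": "stopSequences",
    },
    "anthropic": {  # Anthropic Messages API fields
        "temperature": "temperature",
        "top_p": "top_p",
        "top_k": "top_k",
        "max_tokens": "max_tokens",
        "stop": "stop_sequences",
    },
}


def map_params(provider: str, body: Dict[str, Any]) -> Dict[str, Any]:
    """Translate common generation parameters, dropping any the table doesn't know."""
    names = PARAM_NAMES[provider]
    out = {names[k]: v for k, v in body.items() if k in names and v is not None}
    # OpenAI allows a bare string for `stop`; both providers expect a list
    stop_key = names["stop"]
    if isinstance(out.get(stop_key), str):
        out[stop_key] = [out[stop_key]]
    return out


body = {"model": "x", "temperature": 0.7, "max_tokens": 512, "stop": "END"}
print(map_params("google", body))
# → {'temperature': 0.7, 'maxOutputTokens': 512, 'stopSequences': ['END']}
print(map_params("anthropic", body))
# → {'temperature': 0.7, 'max_tokens': 512, 'stop_sequences': ['END']}
```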

2. Image Handling

Challenges included:

- Size limits

- Format validation

- Efficient processing

Solution: Unified image processing with provider-specific validations.
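A unified validator with provider-specific limits might look like the sketch below. validate_data_url, MAX_IMAGE_BYTES, and ALLOWED_MIME_TYPES are hypothetical, and the byte limits are placeholder values to be checked against each provider's current documentation. Decoding the payload with validate=True checks the base64 format and yields the true byte size in one step.

```python
import base64
import binascii
from typing import Tuple

# Placeholder per-provider limits in bytes; confirm against current provider docs
MAX_IMAGE_BYTES = {"google": 20 * 1024 * 1024, "anthropic": 5 * 1024 * 1024}
ALLOWED_MIME_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def validate_data_url(url: str, provider: str) -> Tuple[str, bytes]:
    """Return (mime_type, raw_bytes) for a base64 image data URL, or raise ValueError."""
    if not url.startswith("data:") or "," not in url:
        raise ValueError("not a data URL")
    header, payload = url.split(",", 1)
    mime_type = header[len("data:"):].split(";", 1)[0]
    if mime_type not in ALLOWED_MIME_TYPES:
        raise ValueError(f"unsupported image type: {mime_type}")
    if ";base64" not in header:
        raise ValueError("expected base64-encoded data")
    try:
        raw = base64.b64decode(payload, validate=True)
    except binascii.Error as exc:
        raise ValueError("malformed base64 data") from exc
    limit = MAX_IMAGE_BYTES[provider]
    if len(raw) > limit:
        raise ValueError(f"image is {len(raw)} bytes; {provider} limit is {limit}")
    return mime_type, raw


png = "data:image/png;base64," + base64.b64encode(b"\x89PNG\r\n\x1a\n").decode()
print(validate_data_url(png, "anthropic")[0])  # → image/png
```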

3. Error Resilience

Key considerations:

- API timeouts

- Rate limits

- Invalid responses

Solution: Multi-layer error handling with graceful degradation.
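For timeouts and rate limits, one common layer is retrying with exponential backoff, then degrading gracefully by returning an error message instead of crashing the chat. The sketch assumes a hypothetical RetryableError that the HTTP layer would raise for timeouts and HTTP 429/5xx responses; with_retries and its parameters are illustrative, and sleep is injectable so the example runs instantly.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, HTTP 429/5xx)."""


def with_retries(call: Callable[[], T], attempts: int = 3, base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` with exponential backoff plus jitter; re-raise after the last attempt."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except RetryableError:
            if attempt == attempts - 1:
                raise
            # 1s, 2s, 4s, ... plus up to 10% jitter so parallel requests don't sync up
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable")


calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise RetryableError("429 Too Many Requests")
    return "ok"


print(with_retries(flaky, sleep=lambda s: None))  # → ok

# Graceful degradation: surface a readable message instead of an exception
try:
    answer = with_retries(lambda: (_ for _ in ()).throw(RetryableError("timeout")),
                          sleep=lambda s: None)
except RetryableError as exc:
    answer = f"Error: provider unavailable ({exc})"
print(answer)  # → Error: provider unavailable (timeout)
```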

The modular design allows for easy extension to support additional AI providers or workflow automation tools in the future.
