The Open WebUI RAG Conundrum: Chunks vs. Full Documents

demodomain

February 20, 2025

Open WebUI’s RAG implementation

- Uploaded files are processed using LangChain document loaders, with a different loader chosen for each file type (PDF, CSV, RST, etc.). For web pages, a WebBaseLoader is used.

- After loading, the documents are split into chunks using either a character-based or token-based text splitter (Tiktoken is used for the latter) with configurable chunk size and overlap.

- These chunks are embedded using a SentenceTransformer model, chosen in the settings and defaulting to sentence-transformers/all-MiniLM-L6-v2. Ollama and OpenAI embedding models are also supported.

- The embeddings, along with the document chunks and metadata, are stored in a vector database. Chroma, Milvus, Qdrant, Weaviate, Pgvector and OpenSearch are supported.

- When a user submits a query, a separate LLM call is made to generate search queries based on the conversation history.

- This uses a configurable prompt template, but by default encourages generating broad, relevant queries unless there’s absolute certainty no extra data is needed.

- This step can be disabled through admin settings if not desired.

- The generated search queries are used to retrieve relevant chunks from the vector database. The default setting combines BM25 search and vector search.

- BM25 provides a keyword-based search, while vector search compares the query embedding with the stored chunk embeddings.

- Optionally, retrieved chunks can be reranked using a reranking model (like a CrossEncoder) and a relevance score threshold is applied to filter results based on similarity.

- The retrieved relevant contexts (chunks with metadata) are formatted into a single string.

- This string, along with the original user query, is injected into a prompt template designed for RAG.

- This template is configurable but defaults to one that instructs the LLM to answer the query using the context and include citations when a <source_id> tag is present.

- The final prompt, including context and user query, is sent to the chosen LLM.

- The LLM’s response, which may include citations based on the provided context, is then displayed to the user.
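To make the chunk-and-retrieve steps above concrete, here is a self-contained toy version in plain Python: a character splitter with chunk size and overlap, a small BM25 scorer for the keyword side, a bag-of-words cosine similarity standing in for a real embedding model, and reciprocal rank fusion (RRF) to merge the two rankings. This is a sketch, not Open WebUI’s code: OWUI delegates these steps to LangChain and a real embedding model, merging with RRF is an assumption on my part (OWUI’s actual fusion and weighting may differ), reranking and the relevance threshold are left out, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Character-based splitter: fixed-size windows that share `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25: keyword relevance of each doc to the query."""
    if not docs:
        return []
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n or 1.0
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two term-count vectors (stand-in for real embeddings)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_indices(scores: list[float]) -> list[int]:
    """Indices sorted from best to worst score (stable for ties)."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


def rrf(rankings: list[list[int]], k: int = 60) -> list[int]:
    """Reciprocal rank fusion: sum 1 / (k + rank) across all rankings."""
    fused: Counter = Counter()
    for ranking in rankings:
        for rank, idx in enumerate(ranking, start=1):
            fused[idx] += 1.0 / (k + rank)
    return [idx for idx, _ in fused.most_common()]


def hybrid_search(query: str, chunks: list[str], top_k: int = 3) -> list[str]:
    """Rank chunks by BM25 and by vector similarity, then fuse the two rankings."""
    keyword = rank_indices(bm25_scores(query, chunks))
    q_vec = Counter(tokenize(query))
    semantic = rank_indices([cosine(q_vec, Counter(tokenize(c))) for c in chunks])
    return [chunks[i] for i in rrf([keyword, semantic])[:top_k]]


if __name__ == "__main__":
    doc = ("Open WebUI splits uploaded files into chunks. The chunks are embedded "
           "and stored in a vector database. BM25 matches keywords; vectors match meaning.")
    chunks = split_text(doc, chunk_size=60, overlap=15)
    for hit in hybrid_search("where are chunks stored", chunks, top_k=2):
        print(repr(hit))
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighbour, and RRF only uses rank positions, so BM25 scores and cosine similarities never need to be put on the same scale.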

- routers/retrieval.py: Handles the API endpoints for document processing, web search, and querying the vector database.

- retrieval/loaders/main.py: Contains the logic for loading documents from different file types.

- retrieval/vector/main.py: Defines the interface for vector database interaction and includes implementations for Chroma and Milvus.

- retrieval/vector/connector.py: Selects the specific vector database client based on configured settings.

- utils/task.py: Contains helper functions for prompt templating, including rag_template.
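For illustration, here is how the context-formatting and templating steps fit together: each retrieved chunk is wrapped in numbered `<source>` tags, the results are joined into one string, and that string is substituted into a template alongside the user query. The tag layout and the `{{CONTEXT}}`/`{{QUERY}}` placeholder names are assumptions modelled loosely on OWUI’s default template, not copied from utils/task.py; `build_context` and `render_rag_prompt` are hypothetical helpers, and the real template is longer and configurable in the admin settings.

```python
import re

# Simplified stand-in for OWUI's configurable RAG template (assumed wording, not the real default)
RAG_TEMPLATE = """### Task:
Respond to the user query using the provided context, citing <source_id> values where relevant.

<context>
{{CONTEXT}}
</context>

<user_query>
{{QUERY}}
</user_query>"""


def build_context(chunks: list[str]) -> str:
    """Wrap each retrieved chunk in numbered <source> tags and join them into one string."""
    return "\n".join(
        f"<source><source_id>{i}</source_id>{chunk}</source>"
        for i, chunk in enumerate(chunks, start=1)
    )


def render_rag_prompt(template: str, chunks: list[str], query: str) -> str:
    """Fill both placeholders in a single pass, so text inside a chunk or the
    query that happens to look like a placeholder is never substituted again."""
    values = {"CONTEXT": build_context(chunks), "QUERY": query}
    return re.sub(r"\{\{(CONTEXT|QUERY)\}\}", lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    print(render_rag_prompt(RAG_TEMPLATE, ["Chunk A text", "Chunk B text"], "What do the docs say?"))
```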

Ways to get files into Open WebUI

Just so everyone is on the same page about what’s possible, I thought I’d outline the options:

1. Drag and drop a file into the prompt.
   - If it’s a document (not an image), it gets RAGged into a temporary knowledge base called “uploads”.
   - But… you can click on the file and select “Using Focused Retrieval”, which means “send the full content, not chunks”. Awesome.
2. Create a knowledge base and add your files. Then link the knowledge base to your model (see my post on creating your own CustomGPT in OWUI).
   - Your documents will get RAGged. Nothing you can do about it.
3. Same as option (2) above, but don’t link the knowledge base to your model. When you want to send one or more files, type # as the first character of your prompt and select one or more files from the knowledge base (or the whole knowledge base, if you like).
   - Again, everything is RAGged.

The Open WebUI RAG Roadblock

User Input: The user types something into the chat input.

Middleware (in open_webui/backend/middleware.py):

The request goes through middleware, specifically process_chat_payload.

process_chat_payload is where the RAG logic is always applied, regardless of whether a custom pipe is being used.

It checks for features.web_search, features.image_generation, and features.code_interpreter to see if those should be enabled.

Crucially, it always calls get_sources_from_files if there are any files. This function is the heart of the RAG system.

The RAG template (RAG_TEMPLATE) is always prepended to the first user message, or a system prompt is added if one doesn’t exist.

get_sources_from_files (in open_webui/backend/retrieval/main.py)

- If RAG_FULL_CONTEXT is True, the entire document is returned for every specified source. That context is not chunked or embedded, but it is still only the document’s extracted text: a pipe cannot get at the file’s binary or base64 content this way.

- If RAG_FULL_CONTEXT is False (the default), chunks are retrieved as before. The number of chunks is set by the RAG_TOP_K config setting. The function embeds the search queries with the embedding function and uses those query embeddings to search the vector DB.
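A simplified model of that branching, just to make the two paths explicit. This is not OWUI’s `get_sources_from_files`: the embedding-plus-vector-search step is passed in as a plain callable, and the names `fetch_sources`, `search` and `full_text` are hypothetical. It only shows that full-context mode returns each file’s whole text, while the default mode returns the top-k chunks for each generated query.

```python
from typing import Callable

# Hypothetical: search(file_id, query, k) embeds the query and returns the k best chunks
SearchFn = Callable[[str, str, int], list[str]]


def fetch_sources(
    files: list[dict],
    queries: list[str],
    search: SearchFn,
    rag_full_context: bool = False,
    top_k: int = 3,
) -> list[dict]:
    sources = []
    for f in files:
        if rag_full_context:
            # Full-context path: the whole extracted text, no chunking or embedding.
            # Only text is available here; the original binary never reaches the pipe.
            documents = [f["full_text"]]
        else:
            # Default path: top-k chunks per generated query, de-duplicated in order.
            documents = []
            for q in queries:
                for chunk in search(f["id"], q, top_k):
                    if chunk not in documents:
                        documents.append(chunk)
        sources.append({"file_id": f["id"], "documents": documents})
    return sources
```

Either way, what comes back is a list of text strings per file, which is exactly why a pipe downstream can’t recover the original file from it.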

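```python
# Inside the pipe: return early for OWUI's background task requests,
# which arrive with this "### Task:" prompt in the system content.
if "### Task:\nAnalyze the chat history" in system_content:
    print("Detected chat title generation request, skipping...")
    return {"messages": messages}
```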

A Workable Workaround (Minimal Core Modification)

LATEST UPDATE 23rd Feb 2025…

The Final Solution: Breaking Free from the Flying Dutchman

I’ve rewritten this blog post three times, as each day I find new information and discover possible solutions.

OWUI’s forcing of RAG, even with options for “full documents”, felt a lot like the tale of Bootstrap Bill Turner and Davy Jones. Open WebUI’s RAG system can be an unwanted passenger, bound to my custom pipe the way Bootstrap Bill is bound to the Flying Dutchman. Every time I tried to process a document, OWUI’s RAG would inject itself into the process, like a symbiotic entity I couldn’t shake off. I needed to stop it somehow.

Here’s what I’ve now implemented in a rather complex and long pipe. But remember how at the start of this post I mentioned that everyone has a very specific environment, tech stack, and use case when it comes to RAG? Well, I do too. I want to be able to connect to models OWUI doesn’t natively support (Perplexity, Google, Anthropic) and also connect OWUI to an n8n workflow. And I have very specific requirements for how the prompt, conversation history, and documents (chunks, full text, binary) are handled depending on the model.

So it’s just too convoluted to share: people will inevitably have questions, and there’s a limit to the time I can put in. But I do have demos of pipes and n8n workflows on my GitHub that cover the concepts I’ve discussed here. It’s just that the final solution is very much coded for my use case, and it’s 1,500 lines long.

I encourage you to take what I’ve learned, look at the demos I have, and build out your own solution suitable specifically for you.

Here’s what I implemented:

- First, my pipe inspects all the relevant settings. If they are such that the internal RAG pipeline will return actual chunks rather than full documents, the pipe knows that the “chunks” attached to the RAG template and injected into the messages are real chunks. Otherwise, it knows each “chunk” is actually a full document.

- In addition, it reads the <source_id> tags that OWUI uses when injecting chunks into the prompt to work out exactly which file each chunk came from. It then adds a new <filename> tag to the results, so the user can mention a filename to the model and the model will know where to look.

- If actual chunks are being returned, the pipe then checks whether the first character of the user prompt is “-”. If it is, the user is essentially saying, “I want to disable all RAG for this turn of the conversation.” So the pipe strips out the chunks and the RAG template entirely; only the user’s prompt, chat history, and system message are sent.

- If actual chunks are being returned and the prompt doesn’t start with “-”, the pipe checks whether the first character is “!”. If it is, the user is saying, “I want the full document text sent on this turn of the conversation.” So the pipe strips out the chunks, reads in the actual file contents, and inserts the full content where the chunks were.
  - How the pipe finds the file ID in the metadata differs depending on whether the chunks came from a file in a knowledge base or from a file uploaded into the prompt.

- For Google, Anthropic, and Perplexity, if the file is an image, the pipe grabs its base64 (easy, because it’s already part of the user input) and sends it along to those models.
  - NOTE: image files can’t be added to a knowledge base, and you can’t select an image file and toggle on “Using Focused Retrieval”, because an image has no text content. So OWUI doesn’t trigger any RAG process for images.

- For Google, if a chunk refers to a PDF, the settings call for full content, and the corresponding valve is enabled, the pipe sends the actual binary of the PDF to Google, because I like how Google does PDF OCR.

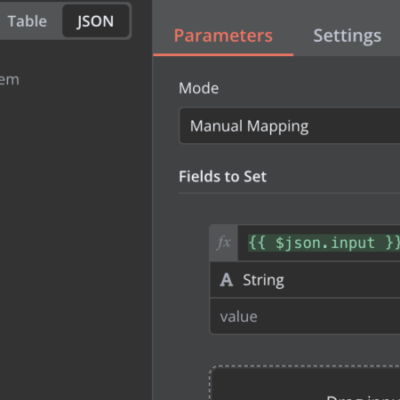

- For n8n, I want n8n to handle all RAG and all chat history. (I’m using OWUI as the interface; my logic layer is all n8n, and my data layer is Supabase.) So, regardless of any settings or any RAG process, the pipe:
  - reads in the original file that’s part of the prompt (from the knowledge base or uploaded, image or document) and sends the file’s base64 plus the last user message to n8n
  - waits for a response, updating the status in OWUI every 2 seconds
  - updates the status in OWUI whenever n8n calls a tool or executes a sub-workflow
  - receives the response from n8n
  - extracts any <think> elements for display as collapsible elements in OWUI
  - displays the response plus the <think> elements
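The waiting and <think> handling on the n8n path could look roughly like this. It assumes the pipe is given OWUI’s `__event_emitter__` callable and that status events use the `{"type": "status", "data": {"description": ..., "done": ...}}` shape; `wait_with_status`, `split_think` and `render` are hypothetical names, the HTTP call to the n8n webhook is replaced by a fake coroutine, and wrapping the reasoning in a `<details>` block is just one way to make it collapsible.

```python
import asyncio
import re
from typing import Awaitable, Callable

Emitter = Callable[[dict], Awaitable[None]]  # assumed shape of OWUI's __event_emitter__
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


async def wait_with_status(job: Awaitable[str], emit: Emitter, interval: float = 2.0) -> str:
    """Await a long-running call, emitting a status update every `interval` seconds."""
    task = asyncio.ensure_future(job)
    elapsed = 0.0
    while True:
        done, _ = await asyncio.wait({task}, timeout=interval)
        if done:
            break
        elapsed += interval
        await emit({"type": "status",
                    "data": {"description": f"Waiting for n8n... {elapsed:.0f}s", "done": False}})
    await emit({"type": "status", "data": {"description": "Response received", "done": True}})
    return task.result()


def split_think(text: str) -> tuple[list[str], str]:
    """Separate <think>...</think> blocks from the visible answer."""
    thoughts = [t.strip() for t in THINK_RE.findall(text)]
    answer = THINK_RE.sub("", text).strip()
    return thoughts, answer


def render(text: str) -> str:
    """Show each reasoning block as a collapsible <details> element above the answer."""
    thoughts, answer = split_think(text)
    blocks = [f"<details>\n<summary>Thinking</summary>\n\n{t}\n</details>" for t in thoughts]
    return "\n\n".join(blocks + [answer])


async def demo() -> None:
    async def fake_n8n() -> str:  # stand-in for the real webhook call
        await asyncio.sleep(0.05)
        return "<think>check the PDF</think>The invoice total is $120."

    async def emit(event: dict) -> None:
        print(event["data"]["description"])

    reply = await wait_with_status(fake_n8n(), emit, interval=0.02)
    print(render(reply))


if __name__ == "__main__":
    asyncio.run(demo())
```

`asyncio.wait` with a timeout doesn’t cancel the pending task, so the loop can keep reporting progress without interrupting the n8n call.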

Summary

With OWUI’s admin-level and uploaded-file-level settings for using full content or not, combined with the prompt-level ability to disable RAG (-) or force full content (!), and a single pipe that handles multiple models plus an n8n workflow, I think I’ve finally proven to myself that it IS possible to… well, not work around, but work within OWUI’s RAG implementation and get reasonable flexibility to switch between RAG chunks, full documents, and binary files.
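The prompt-level switches (- and !) can be sketched in a few lines. This is a minimal illustration, not the code from the actual pipe: `RagMode` and `resolve_turn` are hypothetical names, `chunks_are_full_docs` is assumed to have been worked out beforehand from the admin-level and per-file settings, and removing the control character from the prompt before it is sent on is an assumption.

```python
from enum import Enum


class RagMode(Enum):
    AS_IS = "as_is"          # keep whatever OWUI injected (chunks or full documents)
    NO_RAG = "no_rag"        # "-" prefix: strip chunks and the RAG template for this turn
    FULL_DOCS = "full_docs"  # "!" prefix: replace chunks with each file's full text


def resolve_turn(prompt: str, chunks_are_full_docs: bool) -> tuple[RagMode, str]:
    """Return the RAG mode for this turn and the prompt with any control prefix removed."""
    if chunks_are_full_docs:
        # Full documents were already injected, so the prefixes are not consulted.
        return RagMode.AS_IS, prompt
    if prompt.startswith("-"):
        return RagMode.NO_RAG, prompt[1:].lstrip()
    if prompt.startswith("!"):
        return RagMode.FULL_DOCS, prompt[1:].lstrip()
    return RagMode.AS_IS, prompt


if __name__ == "__main__":
    mode, text = resolve_turn("! summarise the attached contract", chunks_are_full_docs=False)
    print(mode, repr(text))
```

Checking “-” before “!” mirrors the order described in the list above: disabling RAG takes priority over fetching full documents.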

Technical Footnote

I discovered a rather challenging bug. Here’s how OWUI formats the <source> and <source_id> tags:

```
<source>
<source>1</source_id>
content here
</source>
```

See the issue on the second line? The ID element opens with <source> instead of <source_id>, yet closes with </source_id>. That took about 6 hours to notice, while I wondered why my regex extraction of the “content” (<source>) kept failing!
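A regex that tolerates both the mismatched opener shown above and a correct `<source_id>` opener, plus a helper that rewrites each block with a well-formed ID and the added `<filename>` tag described earlier. The `id_to_filename` mapping is assumed to be built elsewhere from the file metadata (which, as noted, is found differently for knowledge-base files and uploads); `parse_sources` and `add_filenames` are hypothetical names.

```python
import re

# One <source> block whose ID element opens with either <source_id> or the
# mismatched <source>, and always closes with </source_id>.
SOURCE_RE = re.compile(
    r"<source>\s*<source(?:_id)?>(?P<id>[^<]+)</source_id>(?P<content>.*?)</source>",
    re.DOTALL,
)


def parse_sources(text: str) -> list[tuple[str, str]]:
    """Return (source_id, content) pairs from an OWUI-style context string."""
    return [(m["id"].strip(), m["content"].strip()) for m in SOURCE_RE.finditer(text)]


def add_filenames(text: str, id_to_filename: dict[str, str]) -> str:
    """Rewrite each <source> block with a well-formed <source_id> and a <filename> tag."""
    def rewrite(m: re.Match) -> str:
        sid = m["id"].strip()
        name = id_to_filename.get(sid, "unknown")
        return (f"<source><source_id>{sid}</source_id>"
                f"<filename>{name}</filename>{m['content']}</source>")
    return SOURCE_RE.sub(rewrite, text)


if __name__ == "__main__":
    raw = "<source>\n<source>1</source_id>\ncontent here\n</source>"
    print(parse_sources(raw))
    print(add_filenames(raw, {"1": "report.pdf"}))
```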
