The Illusion of AI Knowledge: Making LLMs Work for You Without Overreliance

demodomain

February 13, 2025

Large Language Models (LLMs) have revolutionized how we interact with information, creating the illusion of knowledge and understanding. While impressive, it’s crucial to remember that LLMs don’t “know” in the human sense. They are sophisticated pattern-recognition systems, statistically predicting appropriate responses based on vast amounts of training data. This creates the appearance of insight, but it’s distinct from the synthesis, risk-taking, and intuition of human expertise. So how can we effectively leverage these powerful tools without falling prey to the illusion of true understanding?

The Nature of LLM “Knowledge” (and its Limitations)

LLMs excel at synthesizing information, presenting it clearly, and suggesting broad frameworks. They offer breadth, exposing us to new approaches grounded in existing text data. However, they lack the depth that comes from iterative, experiential learning. A seasoned expert can push back not just based on “what’s written,” but on hard-won lessons and nuanced judgment. LLMs, on the other hand:

- Lack the depth of experiential learning.
- Rarely challenge assumptions unless explicitly prompted.
- Don’t truly understand concepts; they model linguistic patterns.
- Operate within token limits (a fixed context window), which cap how much text they can process at once.

These limitations shape how we can use LLMs effectively, especially when dealing with large document collections or complex brainstorming tasks. Uploading all your documents won’t magically imbue the LLM with comprehensive knowledge; token limits restrict how much text it can process in a single request. Even Retrieval-Augmented Generation (RAG), which feeds relevant chunks of information to the model, struggles with broad, open-ended queries: retrieval typically ranks chunks by similarity to the query, and in creative tasks the most similar chunks are not always the most useful ones.
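
To make the token-limit constraint concrete, here is a minimal sketch of splitting a document into pieces that fit a fixed budget. It assumes one whitespace-separated word is roughly one token, which real tokenizers do not guarantee, and the function name `chunk_by_token_budget` is hypothetical.

```python
def chunk_by_token_budget(text: str, max_tokens: int) -> list[str]:
    """Split text into chunks of at most max_tokens words.

    Assumption: one whitespace-separated word is about one token.
    Real tokenizers split text differently, so this is only a rough
    stand-in for a proper token counter.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [
        " ".join(words[i:i + max_tokens])
        for i in range(0, len(words), max_tokens)
    ]


document = "one two three four five six seven"
chunks = chunk_by_token_budget(document, max_tokens=3)
print(chunks)
```

Each chunk can then be sent to the model separately, which is why a single request never "sees" the whole collection.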

The Elusive Nature of Insight (and the Role of Human Expertise)

True insight often emerges from friction—challenges to our ideas and assumptions. An expert’s response is grounded not just in facts, but in intuition and the willingness to risk disagreement. While LLMs can offer “devil’s advocate” arguments when prompted, these arguments remain derived from existing patterns, not lived experience. This distinction is crucial for avoiding overreliance and maintaining critical thinking.

Making LLMs Work for You: Strategies for Effective Utilization

LLMs are powerful tools for idea generation, synthesis, and providing a “statistical” perspective. However, maximizing their effectiveness requires a collaborative approach:

- Idea Generation: Leverage LLMs to outline options, frameworks, and approaches, capitalizing on their breadth and clarity.
- Critical Evaluation: Engage human expertise to interrogate LLM outputs, test assumptions, and incorporate personal experience. This “friction” is where breakthroughs happen.
- Iterative Refinement: Return to the LLM with refined questions and insights gleaned from human evaluation, creating a loop that bridges breadth and depth.

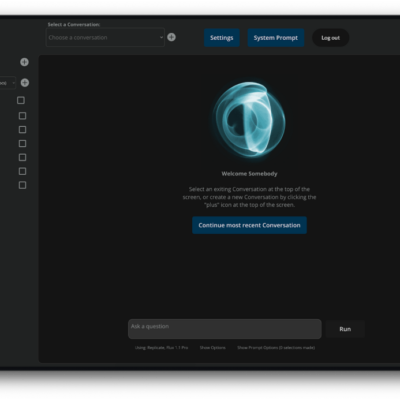
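
The generate, evaluate, refine loop described above can be sketched in a few lines. This is only an illustration: `ask_llm` and `human_review` are hypothetical callables standing in for a real model call and a real reviewer, and the stubs below return canned strings.

```python
from typing import Callable, Optional


def refine_loop(
    question: str,
    ask_llm: Callable[[str], str],
    human_review: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> list[tuple[str, Optional[str]]]:
    """Alternate LLM drafts with human feedback until the reviewer accepts.

    human_review returns None to accept a draft, or a feedback string
    that is folded into the next prompt.
    """
    history = []
    prompt = question
    for _ in range(max_rounds):
        draft = ask_llm(prompt)
        feedback = human_review(draft)
        history.append((draft, feedback))
        if feedback is None:  # reviewer is satisfied
            break
        prompt = (
            f"{question}\n\nPrevious draft:\n{draft}"
            f"\n\nReviewer feedback:\n{feedback}"
        )
    return history


# Stubs for illustration only; a real setup would call a model and a person.
def fake_llm(prompt: str) -> str:
    return "v2 plan" if "Reviewer feedback" in prompt else "v1 plan"


def fake_reviewer(draft: str) -> Optional[str]:
    return None if draft == "v2 plan" else "Address budget risk."


history = refine_loop("How should we launch?", fake_llm, fake_reviewer)
print(history)
```

The point of the design is that the human feedback, not the model, decides when the loop ends.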

Extending LLM Capabilities (Without Building from Scratch)

While fine-tuning and RAG enhance LLM utility, they don’t grant omniscience. Fine-tuning adapts the model to specific patterns and data, akin to training it to speak with your business’s “voice.” Embedding-based methods, using vector databases like Pinecone, allow dynamic querying of your content without retraining the entire model. Fortunately, you don’t need to build a model from scratch to achieve significant benefits. Leverage existing tools and strategies:

- Fine-tuning/Instruction-tuning: Tailor the model’s behavior and responses.
- Embedding + Retrieval Systems: Access relevant knowledge dynamically.
- Specialized Prompt Engineering: Design workflows for incremental data processing.
- API-based Grounding: Connect the LLM to external tools and databases.
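
As a rough illustration of the embedding + retrieval idea, the sketch below ranks documents by cosine similarity to a query. The "embedding" here is just a word-count vector, an assumption made to keep the example self-contained; a real system would use an embedding model and a vector database, and the names `embed`, `cosine`, and `top_k` are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


docs = [
    "refund policy for enterprise customers",
    "onboarding checklist for new engineers",
    "enterprise pricing terms",
]
results = top_k("enterprise refund", docs, k=2)
print(results)
```

Only the top-ranked chunks would be passed to the model, which is how retrieval works around the token limit without retraining anything.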

Brainstorming with All Your Data (A Practical Approach)

Effective brainstorming with large datasets requires a strategic combination of:

- Retrieval Strategy: Use embeddings to surface relevant data chunks.
- Summarization Strategy: Condense documents into manageable representations.
- Workflow: Create multi-step interactions for incremental retrieval, synthesis, and integration of ideas.

Frameworks like LangChain, and features like OpenAI function calling, can help orchestrate these steps.
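
One common way to build such a multi-step workflow is to summarize each document first and then merge the summaries in batches until one remains. The sketch below shows that pattern in plain Python; it does not use LangChain or any OpenAI API, and `summarize` is a hypothetical callable that a real setup would back with a model call.

```python
from typing import Callable


def summarize_in_stages(
    documents: list[str],
    summarize: Callable[[str], str],
    batch_size: int = 2,
) -> str:
    """Summarize each document, then merge summaries batch by batch."""
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2")
    if not documents:
        return ""
    # First pass: condense every document on its own.
    summaries = [summarize(doc) for doc in documents]
    # Later passes: merge neighbouring summaries until one is left.
    while len(summaries) > 1:
        summaries = [
            summarize(" ".join(summaries[i:i + batch_size]))
            for i in range(0, len(summaries), batch_size)
        ]
    return summaries[0]


def first_words(text: str, limit: int = 3) -> str:
    """Stand-in summarizer: keep only the first few words."""
    return " ".join(text.split()[:limit])


docs = [
    "alpha beta gamma delta",
    "epsilon zeta eta theta",
    "iota kappa lambda mu",
]
result = summarize_in_stages(docs, first_words)
print(result)
```

Because each call handles only a small batch, no single request has to fit the whole collection, at the cost of losing detail at every merge step.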

Practical Recommendations for a Feasible Setup:

- Utilize a Vector Database: Store and query document embeddings efficiently.
- Leverage Fine-tuning Sparingly: Focus on tone, style, and specific behaviors, not uploading entire datasets.
- Consider Enterprise Solutions: Explore platforms like ChatGPT Enterprise for deeper customization.
- Iterate Workflows: Combine summaries, embeddings, and prompts for dynamic data interaction.

The Danger of Overreliance (and the Importance of Critical Thinking)

The “appearance of knowledge” can be deceptive. Overreliance on LLMs without critical evaluation risks mistaking their outputs for expert opinion and sidelining essential human judgment. The risk is greatest in domains requiring nuance, moral judgment, or hands-on experience.

Final Thoughts: A Collaborative Future

LLMs are powerful tools, not replacements for human collaborators. They excel at organizing thoughts, providing options, and, to a degree, sanity-checking your thinking. However, the real work of pushing boundaries and challenging assumptions still requires human insight. By combining the breadth of AI with human intuition and experience, we can get more out of both and avoid the pitfalls of overreliance. The path to deep insight lies in this collaborative approach: acknowledging the limitations of LLMs while harnessing their power for effective knowledge exploration.